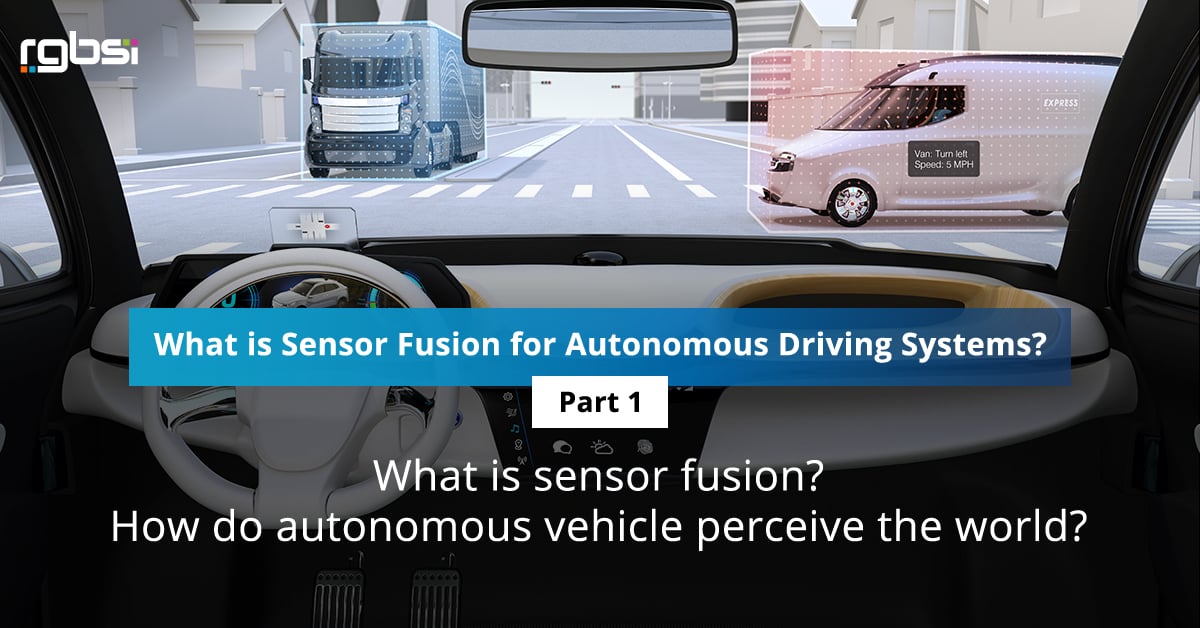

This is a two-part series that dives into sensor fusion in relation to autonomous driving systems. In part 1, we look at what sensor fusion is and how autonomous vehicles perceive the world.

Autonomous vehicles (AVs) use complex sensing systems to evaluate the external environment and make actionable decisions for safe navigation. Just as people combine senses such as sight, hearing, taste, smell, and touch to understand the world around them, autonomous vehicles combine the inputs of their sensors through sensor fusion.

To support the six levels of driving automation (Levels 0 through 5 in the SAE J3016 standard) with assisted and automated technology, vehicles carry several types of sensors, such as LiDAR, RADAR, ultrasonic sensors, and cameras, to understand their environment. Each type of sensor has its own strengths and weaknesses, which is why sensor fusion is imperative for autonomous driving systems.

What is sensor fusion?

Sensor fusion is the process of combining inputs from RADAR, LiDAR, camera, and ultrasonic sensors to interpret environmental conditions with greater detection certainty. No single sensor working on its own can deliver all the information an autonomous vehicle needs to operate with the highest degree of safety.

Using multiple types of sensors together lets autonomous driving systems draw on the strengths of each sensor while offsetting their individual weaknesses. Autonomous vehicles process the fused sensor data with programmed algorithms, which allows them to form judgements and take the right action.
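To make "offsetting individual weaknesses" concrete, here is a minimal Python sketch of one simple fusion technique, inverse-variance weighting, applied to a single distance estimate. It is an illustration, not the method any particular AV stack uses (production systems often rely on Kalman filters or learned fusion models). The `RangeReading` type, the `fuse_ranges` function, and all noise figures are hypothetical values chosen for the example, not real sensor specifications.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    sensor: str
    distance_m: float   # measured distance to the same object, in metres
    variance_m2: float  # hypothetical measurement noise variance, in m^2


def fuse_ranges(readings: list[RangeReading]) -> tuple[float, float]:
    """Inverse-variance weighted average of independent range readings.

    Each reading is weighted by 1 / variance, so more trustworthy sensors
    count for more. Returns (fused_distance, fused_variance).
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance_m2 for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    return fused, 1.0 / total


# Hypothetical readings of one object ahead: LiDAR is assumed to be the most
# precise here, the camera's depth estimate the least precise.
readings = [
    RangeReading("radar", 25.4, 0.25),
    RangeReading("lidar", 25.1, 0.01),
    RangeReading("camera", 26.0, 1.0),
]
distance, variance = fuse_ranges(readings)
print(f"fused distance: {distance:.2f} m, variance: {variance:.4f} m^2")
```

With these assumed numbers the precise LiDAR reading dominates (fused distance 25.12 m), and the fused variance of 1/105 m² is smaller than that of any single sensor, which is the sense in which combining sensors improves detection certainty.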

How do autonomous vehicles perceive the world?

Autonomous driving systems use four core machine learning functions to continuously understand their surroundings, make informed decisions, and predict changes that could interfere with a route. Driving automation is accomplished by combining autonomous vehicle technologies and sensors.

- Detect: uses camera, LiDAR, and RADAR sensors to obtain information about the external environment, such as free driving space, obstructions, and predictions of future movement.

- Segment: groups similar detected data points into clusters to identify categories such as pedestrians, road lanes, and traffic.

- Classify: uses the segmented categories to classify objects that are relevant to spatial awareness and rule out those that are not. For example, the system identifies the space currently available on the road so the vehicle can drive without an accident.

- Monitor: continuously tracks the relevant classified objects in the local vicinity to predict their next actions throughout the entire journey.
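The four functions can be sketched as a toy pipeline over a 2-D point cloud. This minimal Python illustration makes heavy simplifying assumptions and uses hand-written rules where real perception stacks use trained machine learning models: `detect` simply range-gates raw returns, `segment` uses single-linkage clustering with a hypothetical 1.5 m threshold, `classify` labels clusters by footprint size with made-up thresholds and treats anything ahead inside a hypothetical 2 m lane corridor as relevant, and `monitor` matches obstacles between frames by nearest centroid to estimate velocity. All function names, thresholds, and coordinates are hypothetical.

```python
import math
from dataclasses import dataclass

Point = tuple[float, float]  # (x, y) in metres; x points ahead of the vehicle (assumed frame)


@dataclass
class Obstacle:
    label: str
    centroid: Point
    relevant: bool


def detect(raw_points: list[Point], max_range: float = 50.0) -> list[Point]:
    """Detect (simplified): keep only returns within an assumed sensor range."""
    return [p for p in raw_points if math.hypot(*p) <= max_range]


def segment(points: list[Point], eps: float = 1.5) -> list[list[Point]]:
    """Segment: single-linkage clustering; points within eps of a cluster member join it."""
    clusters = []
    unvisited = list(points)
    while unvisited:
        frontier = [unvisited.pop()]
        cluster = []
        while frontier:
            p = frontier.pop()
            cluster.append(p)
            near = [q for q in unvisited if math.dist(p, q) <= eps]
            for q in near:
                unvisited.remove(q)
            frontier.extend(near)
        clusters.append(cluster)
    return clusters


def classify(cluster: list[Point], lane_half_width: float = 2.0) -> Obstacle:
    """Classify: label by footprint size and flag obstacles ahead in our lane as relevant."""
    xs = [p[0] for p in cluster]
    ys = [p[1] for p in cluster]
    extent = max(max(xs) - min(xs), max(ys) - min(ys))
    if extent < 1.0:
        label = "pedestrian"
    elif extent < 6.0:
        label = "vehicle"
    else:
        label = "structure"
    cx, cy = sum(xs) / len(xs), sum(ys) / len(ys)
    relevant = cx > 0 and abs(cy) <= lane_half_width
    return Obstacle(label, (cx, cy), relevant)


def monitor(previous: list[Obstacle], current: list[Obstacle], dt: float):
    """Monitor: match each relevant obstacle to the nearest previous one and estimate velocity."""
    tracks = []
    for obs in current:
        if not obs.relevant:
            continue
        match = min(previous, key=lambda o: math.dist(o.centroid, obs.centroid), default=None)
        if match is None:
            tracks.append((obs, None))  # new object, no history yet
            continue
        vx = (obs.centroid[0] - match.centroid[0]) / dt
        vy = (obs.centroid[1] - match.centroid[1]) / dt
        tracks.append((obs, (vx, vy)))
    return tracks


def perceive(raw_points: list[Point]) -> list[Obstacle]:
    return [classify(c) for c in segment(detect(raw_points))]


# Two frames 0.1 s apart: a small object ahead moves 1 m closer; a car sits off to the side.
car = [(30.0, 8.0), (31.0, 8.0), (32.0, 8.0), (33.0, 8.0), (34.0, 8.0)]
frame1 = [(10.0, 0.0), (10.3, 0.2), (10.1, -0.2)] + car + [(80.0, 0.0)]
frame2 = [(9.0, 0.0), (9.3, 0.2), (9.1, -0.2)] + car + [(80.0, 0.0)]
prev, curr = perceive(frame1), perceive(frame2)
for obs, velocity in monitor(prev, curr, dt=0.1):
    print(obs.label, obs.centroid, velocity)
```

In this example the return at 80 m is dropped by `detect`, the small cluster near (10, 0) is tracked with a velocity of roughly -10 m/s along x, and the vehicle-sized cluster at y = 8 m falls outside the assumed lane corridor, so it is classified but not monitored. Greedy nearest-centroid matching is the simplest possible association step; real trackers also have to handle occlusion and ambiguous matches.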

Next week we will cover types of sensors and sensor fusion approaches in “What is Sensor Fusion for Autonomous Driving Systems? – Part 2”. Stay tuned!

Read part 2 of the series: What is Sensor Fusion for Autonomous Driving Systems? – Part 2

About RGBSI

At RGBSI, we deliver total workforce management, engineering, quality lifecycle management, and IT solutions that provide strategic partnership for organizations of all sizes.

Engineering Solutions

As an organization of engineering experts, we understand the importance of modernization. By pairing modern technology with design expertise, we elevate fundamental engineering principles to accommodate growing product complexity requirements. We work with clients to unlock the full potential of their products and enable future innovation. Learn more about our automation and digital engineering services.