This is part 2 of a two-part series on sensor fusion for autonomous driving systems. In this part, we look at the types of sensors and the sensor fusion approaches.

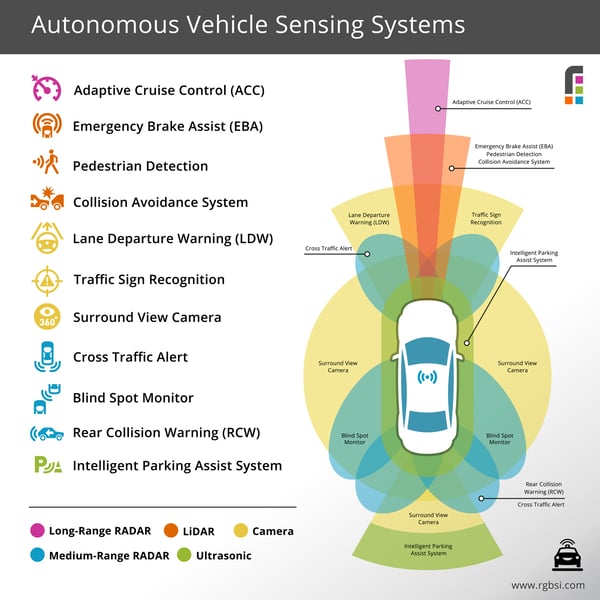

Sensors are a necessity for autonomous vehicles to function. They allow vehicles to understand the world and process information to go from one place to another safely. The most common types of sensors automakers use are LiDAR, RADAR (long-range and medium-range), camera, and ultrasonic.

Types of Sensors

LiDAR Sensors

LiDAR sensors use lasers to scan the external environment and measure the distance to objects from the time the light takes to travel out and back at the speed of light. The many data points this generates are turned into point clouds. Autonomous driving systems use LiDAR to analyze lane markings or detect the road.
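
To make the distance measurement concrete, here is a minimal sketch of how a single laser return could become one point in a point cloud. The function names, the sample returns, and the axis convention (x forward, y left, z up) are assumptions chosen for illustration, not taken from any particular LiDAR product.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, by definition of the metre


def tof_to_range(round_trip_s: float) -> float:
    """Range in metres from a pulse's round-trip time (out and back, so halve it)."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float) -> tuple:
    """Convert one return (range plus beam angles) to an (x, y, z) point.

    Assumed convention: x forward, y left, z up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # length of the beam's ground projection
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


# Hypothetical returns: (round-trip time in seconds, azimuth, elevation)
returns = [(200e-9, 0.0, 0.0), (400e-9, 90.0, 0.0), (100e-9, 0.0, 30.0)]
point_cloud = [to_point(tof_to_range(t), az, el) for t, az, el in returns]
```

A 200-nanosecond round trip corresponds to an object roughly 30 meters away, which shows how precisely the sensor has to time each pulse.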

LiDAR Applications in AVs:

- Emergency brake assist (EBA)

- Pedestrian detection

- Collision avoidance system

Pros

- Long-range detection (farther than a camera)

- Track movement and direction of objects

- High resolution 3D modeling (better than RADAR)

- Unaffected by darkness or bright light conditions

Cons

- Poor visibility in fog, dust, rain, or snow

- Sensitive to dirt/debris

- Bulky, with moving parts required to function

- Expensive

RADAR Sensors

RADAR sensors emit electromagnetic (radio) waves and use the reflections that return to measure an object's velocity, range, and angle. This is nothing new for the automotive space; police have long used RADAR in their vehicles to detect vehicles exceeding the speed limit.
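
As a rough illustration of the velocity measurement, the sketch below uses the standard Doppler relation: a reflection from a moving object comes back shifted in frequency by an amount proportional to the object's speed toward or away from the sensor. The 77 GHz carrier is an assumed value (a band commonly used for automotive radar), and the function names are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0
CARRIER_HZ = 77e9  # assumed carrier frequency for this example


def doppler_shift(radial_velocity_m_s: float, carrier_hz: float) -> float:
    """Frequency shift of the echo for an object closing at the given speed."""
    return 2.0 * radial_velocity_m_s * carrier_hz / SPEED_OF_LIGHT_M_S


def radial_velocity(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Invert the relation: recover closing speed from a measured shift."""
    return doppler_shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


# A vehicle closing at 10 m/s (36 km/h) shifts the echo by about 5.1 kHz.
shift_hz = doppler_shift(10.0, CARRIER_HZ)
speed_m_s = radial_velocity(shift_hz, CARRIER_HZ)
```

Note that this only yields the radial component of velocity, that is, motion along the line between the sensor and the object.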

RADAR Applications in AVs:

- Adaptive cruise control (ACC) (long-range)

- Cross traffic alert (medium-range)

- Blind spot monitor (medium-range)

- Rear collision warning (RCW) (medium-range)

Pros

- Largely unaffected by weather conditions

- Unaffected by lighting and noise

- Detects objects at long distances

- Works best for detecting speed of other vehicles

- Inexpensive

Cons

- Limited depth and may falsely identify objects

- Can’t detect small objects and may overlook objects

- Low-definition modeling of objects

Camera Sensors

Camera sensors capture high-resolution images and videos from different angles to obtain visual data on things that can only be “seen,” like traffic signs, animals, and road markings.

Camera Applications in AVs:

- Lane departure warning (LDW)

- Traffic sign recognition

- Surround view camera

Pros

- Easily distinguish shapes and color to classify objects

- High degree of environmental sensing

- Recognize 2D information through high-definition images

- Small size for discreet placement

- Inexpensive

Cons

- Poor visibility in harsh weather conditions like snowstorms

- Limited contrast and no depth perception

- Affected by extreme light shifts; dark shadows or bright lights can obscure object detection

- Don’t provide quantifiable distance information
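
The lack of depth information follows from how a single camera forms an image. The sketch below uses a simple pinhole camera model to show two points at different distances landing on the same pixel. The focal length, image center, and point coordinates are made-up values for illustration.

```python
def project(point_xyz, focal_px=1000.0, cx=640.0, cy=360.0):
    """Pinhole projection of a 3D point to a pixel (u, v).

    Assumed camera frame: x right, y down, z forward, all in metres.
    """
    x, y, z = point_xyz
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return (focal_px * x / z + cx, focal_px * y / z + cy)


near = (1.0, 0.5, 10.0)
far = (3.0, 1.5, 30.0)  # same viewing ray, three times farther away

near_pixel = project(near)
far_pixel = project(far)  # lands on the same pixel as `near`
```

Because the projection divides by the distance `z`, that distance cannot be recovered from one pixel alone, which is one reason camera data is paired with a ranging sensor.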

Ultrasonic Sensors

Ultrasonic sensors emit pulses of sound and detect large, solid objects nearby from the time the echoes take to return. They are used to measure the distance to objects in close proximity, such as curbs or other vehicles while parking.
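
Here is a minimal sketch of echo ranging, assuming the common linear approximation for the speed of sound in air (about 331.3 m/s at 0 °C, rising by roughly 0.606 m/s per degree Celsius). The function names and the sample echo time are hypothetical.

```python
def speed_of_sound_m_s(air_temp_c: float) -> float:
    """Approximate speed of sound in air; linear fit, reasonable near everyday temperatures."""
    return 331.3 + 0.606 * air_temp_c


def echo_to_distance(round_trip_s: float, air_temp_c: float = 20.0) -> float:
    """Distance in metres to the reflecting object (the sound travels out and back)."""
    return speed_of_sound_m_s(air_temp_c) * round_trip_s / 2.0


echo_s = 5.8e-3  # hypothetical round-trip time of 5.8 ms
mild_day_m = echo_to_distance(echo_s, air_temp_c=20.0)   # about 1.0 m
cold_day_m = echo_to_distance(echo_s, air_temp_c=-10.0)  # about 0.94 m
```

The same echo time maps to distances that differ by around 5 cm between the two temperatures, so a sensor that assumes a fixed speed of sound drifts as the air warms or cools.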

Ultrasonic Applications in AVs:

- Intelligent parking assist system

Pros

- Most accurate for objects in close proximity

- Unaffected by color of objects

- Works well in the dark

- Precise at measuring parallel surface distance

- Compact and inexpensive

Cons

- Can’t detect soft objects well

- Inaccuracy occurs with temperature shifts

- Limited to short-range detection

Sensor Fusion Approaches

For sensor fusion to succeed in autonomous driving systems, data from multiple sources must be pieced together correctly. For example, the system must verify that the data sets received from a LiDAR sensor and a camera sensor describe the same object.
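
One simple way to check that a LiDAR detection and a camera detection refer to the same object is to project the LiDAR point into the camera image and see whether it falls inside a camera bounding box. The sketch below is one possible approach under strong assumptions, not a description of any production system: the LiDAR centroids are assumed to be already expressed in the camera's coordinate frame, the camera is modeled as an ideal pinhole, and all names and numbers are hypothetical.

```python
def project_to_image(point_xyz, focal_px=1000.0, cx=640.0, cy=360.0):
    """Pinhole projection; camera frame assumed x right, y down, z forward (metres)."""
    x, y, z = point_xyz
    return (focal_px * x / z + cx, focal_px * y / z + cy)


def associate(lidar_centroids, camera_boxes):
    """Map each LiDAR centroid index to the label of the camera box it projects into.

    camera_boxes: {label: (u_min, v_min, u_max, v_max)} in pixels.
    Centroids behind the camera or outside every box stay unmatched.
    """
    matches = {}
    for index, centroid in enumerate(lidar_centroids):
        if centroid[2] <= 0:
            continue  # behind the camera, cannot appear in the image
        u, v = project_to_image(centroid)
        for label, (u_min, v_min, u_max, v_max) in camera_boxes.items():
            if u_min <= u <= u_max and v_min <= v <= v_max:
                matches[index] = label
                break
    return matches


lidar_centroids = [(1.0, 0.5, 10.0), (-4.0, 0.0, 20.0)]
camera_boxes = {"pedestrian": (700, 350, 780, 470), "car": (100, 300, 300, 420)}
matches = associate(lidar_centroids, camera_boxes)  # {0: "pedestrian"}
```

The first centroid projects to pixel (740, 410), inside the pedestrian box, so the LiDAR range can be attached to the camera's classification. The second projects to (440, 360), outside both boxes, and is left unmatched.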

There are three primary sensor fusion approaches for turning associated data into interpretable information that autonomous vehicles can use to perform perception functions.

- High-level fusion (HLF) - each sensor carries out its function independently, and the data is fused together only after it has been processed independently.

- Low-level fusion (LLF) - raw data from each sensor is fused at the initial point of detection. No data gets filtered out, so all information is saved to improve obstacle detection accuracy.

- Mid-level fusion (MLF) - this is a hybrid of the HLF and LLF approaches. Only targeted information points from each sensor are fused together at the low (raw data) level. For example, the system could fuse camera and LiDAR object detection at the raw data level on a bright day to counteract individual sensor weaknesses and classify a pedestrian crossing the road.
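
The difference between the high-level and low-level approaches can be sketched with a toy example. Here the "detector" is just an average of raw range samples, and the high-level fusion step combines the two per-sensor results with inverse-variance weighting, a common way to merge independent estimates. Both choices are stand-ins for illustration; real pipelines use far more elaborate detectors, and the sample values and variances below are made up.

```python
from statistics import mean


def detect_range(samples):
    """Stand-in detector: estimate an object's range as the mean of raw samples."""
    return mean(samples)


def high_level_fusion(lidar_samples, radar_samples, lidar_var, radar_var):
    """Each sensor is processed on its own; only the two results are fused."""
    lidar_estimate = detect_range(lidar_samples)
    radar_estimate = detect_range(radar_samples)
    w_lidar, w_radar = 1.0 / lidar_var, 1.0 / radar_var  # trust the less noisy sensor more
    return (w_lidar * lidar_estimate + w_radar * radar_estimate) / (w_lidar + w_radar)


def low_level_fusion(lidar_samples, radar_samples):
    """Raw samples are pooled first; a single detector sees all of them."""
    return detect_range(lidar_samples + radar_samples)


lidar = [20.1, 19.9, 20.0]  # hypothetical raw range samples in metres
radar = [20.6, 20.2]

hlf_range = high_level_fusion(lidar, radar, lidar_var=0.01, radar_var=0.04)
llf_range = low_level_fusion(lidar, radar)
```

In this toy setup the high-level result lands near 20.08 m and the low-level result near 20.16 m. The two approaches fuse at different stages and so can disagree. A mid-level variant would pool only selected raw samples before detection.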

Conclusion

Sensor fusion capabilities are a vital component for autonomous driving systems to perceive the world accurately. LiDAR, RADAR, ultrasonic, and camera sensors can each carry out some detection functions very well on their own, while other functions are more of a challenge because of each sensor's weaknesses. Aggregating the strengths across sensors produces higher-quality information for autonomous vehicles to run safely.

Reference part 1 of the series: What is Sensor Fusion for Autonomous Driving Systems? – Part 1
